Raja S. Kushalnagar, Brian P. Trager, Karen J. Beiter and Noella A. Kolash

Information and Computing Studies, Rochester Institute of Technology, Rochester, NY 14623-5604

ABSTRACT

Deaf students often miss information in lectures because they must watch several simultaneous visual sources. We developed an application that enables students to rewind the lecture video in real time and review information that they would otherwise miss completely. We determined that the maximum playback rate students were comfortable with was approximately twice normal speed. Students reported higher satisfaction and more accurate answers when using this approach, which can be used for real-time review in virtually any gathering. In our study, 25 participants (10 female; ages 18 to 33) completed a balanced repeated-measures design to determine whether perception differs across replay speeds. The results indicate that participants generally preferred to watch replay at up to 2x speed and that their preference dropped rapidly at faster speeds. These results may serve as a guide for designers of assistive technology for deaf and hard of hearing students.

BACKGROUND

Deaf and hard of hearing (deaf) students need full visual access to classroom lectures to benefit fully from them, and federal laws mandate this equal access. Educational institutions satisfy the law and meet this need by providing accessible services that translate auditory information into visual information, such as sign language interpreters or captioners. These visual accommodations still do not provide equal access to deaf students as compared with hearing students. Specifically, deaf students’ graduation rate remains abysmal, at around 25% (Lang, 2002). In contrast, the 6-year nationwide graduation rate for hearing students was 56% (National Center for Higher Education Management Systems, 2008). Although modern classroom technology has contributed to the visual dispersion of multiple information sources, technology can also reduce these barriers and benefit everyone, not just deaf participants. The real-time replay feature described in this paper addresses the resulting loss of information.

Cognitive Load

The need to improve learning accessibility for deaf students is well documented (Antia, Sabers, & Stinson, 2007; Marschark, Lang, & Albertini, 2002; Marschark, Sapere, Convertino, & Pelz, 2008; Stinson & Antia, 1999). The passage of equal-access laws such as the Americans with Disabilities Act has vastly improved deaf student access to classroom information by providing visual accommodations such as captioners or interpreters as shown in Figure 1.

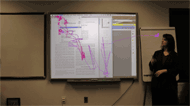

These visual accommodations still do not provide equal access to deaf students as compared with hearing students (Cavender, Bigham, & Ladner, 2009; Kushalnagar, Cavender, & Pâris, 2010; Marschark et al., 2005). The reason, as can be observed in Figure 1, is that deaf students have to simultaneously watch the visual translation of the lecture audio and the lecture visuals, i.e., the slides. Hearing students, on the other hand, can listen to the teacher while following the slides or demonstration. For example, in Figure 2, the teacher is showing the students the steps needed to use a software application. In the three-second time-lapse snapshot of two students’ eye-gaze paths, there is a clear difference between the hearing student’s path, shown in red, and the deaf student’s path, shown in purple. The hearing student’s gaze closely follows the mouse pointer while the teacher explains aloud how to navigate. The deaf student’s gaze, on the other hand, is mostly focused on the interpreter; when the deaf student realizes that the teacher has started to demonstrate the program steps, the student switches gaze to the screen but spends extra time searching for the mouse pointer and looking for contextual information.

In other words, because hearing students can look around while the teacher talks, they catch the teacher’s cues and immediately shift their attention among multiple visuals that lie outside their narrow viewing focus. In contrast, deaf students catch the teacher’s cues only after the cues have been relayed through the interpreter or captioner, which delays their shifts of attention between visuals. Deaf students face difficulties in “managing and shifting attention” among these multiple sources, and doing so effectively remains an elusive goal. When a student’s view is poor or attention is poorly managed, information loss is likely to occur. If students must focus on managing their own shifts of attention from one source to another, cognitive effort goes toward low-level attention management at the expense of higher-order thinking skills (Mayer, Heiser, & Lonn, 2001; Mayer & Moreno, 1998). To manage attention to multiple sources within their visual field, deaf students must do two things at the same time: overtly attend to the interpreter or the captions on the screen while covertly attending to changing stimuli in the learning environment. When attending to the interpreter, the deaf student depends on the interpreter’s cues (relayed from the instructor’s auditory cues) and must be ready to disengage, shift, and re-engage attention on a new source (e.g., slides or whiteboard). When attending to the captions, the deaf student relies on external cues that appear in the periphery (e.g., changing slides) to shift attention to the instructor, slides, or whiteboard. Because people have a relatively narrow focused field of view of around 10 degrees, they have to move their heads to switch between widely separated sources, which can be exhausting. By consolidating views closer together and by supporting low-level attention skills, more attentional resources become available for higher-order thinking, with associated positive learning and quality-of-life outcomes.
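The head-movement argument can be made concrete with the standard visual-angle formula: two sources separated by a distance s and viewed from a distance d subtend an angle of about 2·atan(s / (2d)). The sketch below is a minimal illustration that assumes a symmetric, head-on layout and compares that angle with the roughly 10-degree focused field of view cited above. The classroom distances in the usage line are hypothetical, not measurements from this study.

```python
import math

FOCUS_FIELD_DEG = 10.0  # approximate focused field of view cited in the text


def visual_angle_deg(separation_m: float, distance_m: float) -> float:
    """Angle (in degrees) subtended at the eye by two sources `separation_m`
    apart, viewed head-on from `distance_m` away (symmetric layout assumed)."""
    return math.degrees(2.0 * math.atan(separation_m / (2.0 * distance_m)))


def needs_head_turn(separation_m: float, distance_m: float) -> bool:
    """True if the two sources cannot both fit inside the focused field."""
    return visual_angle_deg(separation_m, distance_m) > FOCUS_FIELD_DEG


# Hypothetical layout: interpreter 2 m to the side of the slides, student 6 m away.
print(f"{visual_angle_deg(2.0, 6.0):.1f} degrees; "
      f"head turn needed: {needs_head_turn(2.0, 6.0)}")
```

Under these assumed distances, the two sources are about 19 degrees apart, nearly twice the focused field, so the student must turn their head. Moving them to within 1 m of each other at the same viewing distance brings the angle down to about 9.5 degrees, which is the kind of view consolidation the paragraph describes.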

Assistive Technology as an Aid to Interpreters

Previous accessible technology research has focused on view consolidation.

An eye-tracking study of deaf students’ gaze during an interpreted lecture (Marschark et al., 2005) found that deaf students spent at least as much time watching the interpreter as hearing students spent watching the instructor. Deaf students also spent much less time watching course materials, such as slides. As a result, hearing students gain more information in class than deaf students (Marschark et al., 2006). Another disadvantage is that deaf students often rely solely on what they see to gather information, so they miss information when instructors do not allow enough time for them to see both the interpreter and the active lecture visuals (Kushalnagar et al., 2010). Hearing students often depend on auditory cues from the instructor to shift their attention from the instructor to the slides and vice versa. Unlike hearing students, deaf students cannot depend on auditory cues to decide when to switch from the overhead slides to the interpreter or vice versa. They also report frustration in getting presenters and other participants to accept the presence of the interpreter in highly visible spaces (Kushalnagar & Trager, 2011).

Without the aid of technology, when an interpreter notices that the student has looked away, the interpreter pauses, then speeds up to convey all the information the student missed while looking away. If there is more than one deaf student in a mainstreamed class, the interpreter generally cannot pause, because it would be impossible to accommodate every student’s attention shifts; instead, the interpreter continues to interpret for the majority of the students. Without accessible technology, information from the source not being watched is permanently lost. With real-time replay, a student can look at the slide, turn to the interpreter screen, back up 5 seconds to catch any auditory information they missed, and then return to the current time position.
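The rewind-and-return behavior described above can be sketched as a rolling buffer that keeps the most recent stretch of a live stream while recording continues. This is a minimal illustration, not the application’s actual implementation. The `Frame` and `ReplayBuffer` names, the 60-second retention window, and the frame representation are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Optional


@dataclass
class Frame:
    timestamp: float  # seconds since the start of the lecture
    data: bytes       # encoded video frame (placeholder payload)


class ReplayBuffer:
    """Hypothetical rolling buffer holding the last `capacity_s` seconds of a
    live stream so a viewer can seek back while recording continues."""

    def __init__(self, capacity_s: float = 60.0) -> None:
        self.capacity_s = capacity_s
        self.frames: Deque[Frame] = deque()

    def push(self, frame: Frame) -> None:
        """Append a live frame and drop frames older than the retention window."""
        self.frames.append(frame)
        while self.frames and frame.timestamp - self.frames[0].timestamp > self.capacity_s:
            self.frames.popleft()

    def live_time(self) -> Optional[float]:
        """Timestamp of the newest frame (the current time position)."""
        return self.frames[-1].timestamp if self.frames else None

    def seek_back(self, seconds: float) -> Optional[Frame]:
        """Earliest retained frame at or after (live time - seconds)."""
        if not self.frames:
            return None
        target = self.frames[-1].timestamp - seconds
        for frame in self.frames:
            if frame.timestamp >= target:
                return frame
        return self.frames[-1]


# Illustration: one frame per second for 100 seconds, then rewind 5 seconds.
buf = ReplayBuffer(capacity_s=60.0)
for t in range(100):
    buf.push(Frame(timestamp=float(t), data=b""))
resume = buf.seek_back(5.0)
print(buf.live_time(), resume.timestamp if resume else None)
```

Seeking back 5 seconds returns the frame from which the student would resume. Returning to the current time position corresponds to jumping to `live_time()` again. A rewind longer than the retention window falls back to the oldest frame still held.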

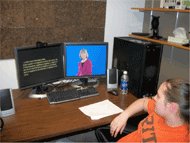

This feature can be used with any of the screens, but we expect it to be used primarily with the interpreter screen. If a student does not quite catch the signs, the student can quietly replay them without interrupting the interpreter or the class to ask for clarification. Figures 3 and 4 illustrate how the deaf student in the foreground has to choose, at any given time, one of several simultaneous views: the class work, the interpreter’s visual translation of the instructor, the teacher, the slides, or other classmates. They also illustrate the importance of offering a faster replay speed so that the student can catch up with the lecture and the class.
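The need for a faster replay speed follows from simple arithmetic. Suppose a student rewinds d seconds and replays at r times normal speed while the live lecture continues. The student’s lag behind live then shrinks by (r − 1) seconds for each second of wall-clock time, so catching up takes d / (r − 1) seconds, and at 1x the student never catches up. The sketch below assumes uninterrupted playback after the rewind. The 5-second rewind comes from the scenario above, and the rates are the study’s replay speeds.

```python
import math


def catch_up_seconds(rewind_s: float, rate: float) -> float:
    """Wall-clock seconds needed to return to live after rewinding `rewind_s`
    seconds and then replaying at `rate` times normal speed.

    While replaying, the lag behind live shrinks by (rate - 1) seconds per
    second of wall-clock time, so it reaches zero after rewind_s / (rate - 1).
    """
    if rewind_s < 0:
        raise ValueError("rewind_s must be non-negative")
    if rate <= 1.0:
        return math.inf  # at normal speed or slower, the lag never shrinks
    return rewind_s / (rate - 1.0)


for rate in (1.5, 2.0, 2.5, 3.0):
    print(f"{rate}x: {catch_up_seconds(5.0, rate):.1f} s to catch up after a 5 s rewind")
```

After a 5-second rewind, replay at 1.5x takes 10 seconds to return to live, 2x takes 5 seconds, and 3x takes 2.5 seconds. This trade-off between catch-up time and intelligibility is what the replay-speed study examines.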

Replay Speed

Seminal research on ASL production rates (Bellugi & Fischer, 1972; Grosjean, 1979) showed that, at normal rates, spoken English words were about half as long as ASL signs, but that the time required to convey a proposition (i.e., a conceptual unit) was the same in the two languages and modalities. The slower rate of individual signs is offset by the visual modality, which permits relatively more simultaneity in signing than in spoken English.

The only study of time-compressed ASL that we found is that of Heiman and Tweney (1981), who examined the effects of rate on the perception and comprehension of signing. At double speed, they observed a decrement of roughly 20 to 25 percentage points in both the intelligibility of isolated signs and the comprehension of short signed passages.

METHODOLOGY

We recorded a two-minute lecture on pre-algebra. We chose this topic because we assumed that all students would be familiar with it. The material was neither dense nor technical, yet it was presented in a highly visual and engaging fashion. We created five sequential clips of the lecture at speeds ranging from 1x to 3x normal speed in steps of 0.5x (1x, 1.5x, 2x, 2.5x, and 3x).
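The speed schedule above can be written out as a short calculation. The sketch assumes, as a hypothetical for illustration, that each clip covers the full two-minute recording. Under that assumption, the viewing time is the source duration divided by the playback speed.

```python
from typing import List

SOURCE_S = 120.0  # two-minute lecture recording


def clip_speeds(start: float = 1.0, stop: float = 3.0, step: float = 0.5) -> List[float]:
    """Playback speeds from `start` to `stop` (inclusive) in fixed increments."""
    count = round((stop - start) / step) + 1
    return [start + step * i for i in range(count)]


def viewing_seconds(source_s: float, speed: float) -> float:
    """Wall-clock time needed to watch `source_s` seconds of video at `speed`."""
    return source_s / speed


for speed in clip_speeds():
    print(f"{speed}x -> {viewing_seconds(SOURCE_S, speed):.0f} s")
```

Under this assumption, the five conditions take 120, 80, 60, 48, and 40 seconds to watch, respectively.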

We recruited 25 subjects (10 females), ages 18 to 33. All subjects rated themselves as fluent in ASL; 21 of 25 had used ASL for more than 10 years, and the rest had used ASL for more than five years. Before the study began, each participant was given an opportunity to view a short clip of the video at normal speed. The participants then viewed all video clips in a randomized, balanced order.
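The text does not specify how the balanced order was constructed. One common way to counterbalance five conditions is a Williams design (a balanced Latin square), sketched here as an assumption rather than as the procedure actually used. In a Williams design, every speed appears equally often in each position, and each speed immediately follows every other speed equally often. For an odd number of conditions, this requires 2n = 10 sequences, so 25 participants cannot be spread perfectly evenly across them. The `assign_orders` helper and its fixed seed are hypothetical.

```python
import random
from typing import List


def williams_design(n: int) -> List[List[int]]:
    """Balanced Latin square (Williams design) for conditions 0..n-1.

    Each condition appears equally often in every position, and each ordered
    pair of adjacent conditions occurs equally often across the sequences.
    For odd n, the mirror-image sequences are appended, giving 2n sequences.
    """
    # First sequence alternates from both ends: 0, 1, n-1, 2, n-2, ...
    first = [0]
    low, high = 1, n - 1
    take_low = True
    while len(first) < n:
        if take_low:
            first.append(low)
            low += 1
        else:
            first.append(high)
            high -= 1
        take_low = not take_low
    # Remaining sequences are cyclic shifts of the first one.
    rows = [[(c + r) % n for c in first] for r in range(n)]
    if n % 2 == 1:
        rows += [list(reversed(row)) for row in rows]
    return rows


def assign_orders(participants: int, speeds: List[float], seed: int = 0) -> List[List[float]]:
    """Hypothetical assignment: shuffle the sequences, then hand them out in turn."""
    rng = random.Random(seed)
    rows = williams_design(len(speeds))
    rng.shuffle(rows)
    return [[speeds[c] for c in rows[p % len(rows)]] for p in range(participants)]


orders = assign_orders(25, [1.0, 1.5, 2.0, 2.5, 3.0])
print(orders[0])
```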

After watching the entire sequence of videos (an example is shown in Figure 5), each participant rated the replays they had watched by answering three questions: 1) “Is the playback easy to understand?”, rated on a Likert scale from 1 to 5, with 1 being very easy to follow and 5 being very hard to follow; 2) “Explain what was easy to follow”; and 3) “What was hard to follow?”

RESULTS

On the 1 to 5 Likert scale (1 = very easy to follow, 5 = very hard to follow), students found playback at 1x and 1.5x very easy to follow: the mean weighted Likert score was 1.36 for 1x and 1.40 for 1.5x. The score rose moderately to 1.92 at 2x and then jumped more sharply to 2.80 at 2.5x and 3.60 at 3x.
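A weighted Likert mean is the sum of each rating multiplied by the number of participants who gave it, divided by the total number of participants. The sketch below computes this mean from a rating histogram. The counts in the usage line are hypothetical, because the per-rating distributions are not reported here. With 25 participants, each reported mean corresponds to a whole-number rating total (for example, 1.36 × 25 = 34 and 3.6 × 25 = 90).

```python
from typing import Dict


def weighted_likert_mean(counts: Dict[int, int]) -> float:
    """Mean rating from a histogram mapping each rating (1-5) to the number
    of participants who chose it."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("at least one rating is required")
    return sum(rating * n for rating, n in counts.items()) / total


# Hypothetical histogram for illustration only (not data from this study).
example = {1: 20, 2: 4, 3: 1}
print(f"{weighted_likert_mean(example):.2f}")
```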

The students’ explanations matched the Likert scores quite well. At both 1x and 1.5x, all of the students’ comments noted that the captions were easy to read. At 2x, most students no longer described the captions as easy to follow, but all except one agreed that the signs were clear and understandable; that student said the caption speed was a bit overwhelming. At 2.5x, the percentage of students who were able to follow the captions dropped to 30 percent, and many of them stated that they felt compelled to attend fully to the replay. At 3x, only one student reported being able to follow the signs.

CONCLUSION

Most students liked the real-time rewind feature and commented that they would like to use it in their classes to aid content capture and recall. Students also noted that they preferred to replay captions at their fastest comfortable speed so that they could always strive to watch the information “live”.

REFERENCES

Antia, S. D., Sabers, D. L., & Stinson, M. S. (2007). Validity and reliability of the classroom participation questionnaire with deaf and hard of hearing students in public schools. Journal of Deaf Studies and Deaf Education, 12(2), 158–171. doi:10.1093/deafed/enl028

Bellugi, U., & Fischer, S. (1972). A comparison of sign language and spoken language. Cognition, 1(2-3), 173–200. doi:10.1016/0010-0277(72)90018-2

Cavender, A. C., Bigham, J. P., & Ladner, R. E. (2009). ClassInFocus. Proceedings of the 11th International ACM SIGACCESS Conference on Computers and Accessibility - ASSETS ’09 (pp. 67–74). New York, New York, USA: ACM Press. doi:10.1145/1639642.1639656

Grosjean, F. (1979). A study of timing in a manual and a spoken language: American sign language and English. Journal of Psycholinguistic Research, 8(4), 379–405. doi:10.1007/BF01067141

Heiman, G. W., & Tweney, R. D. (1981). Intelligibility and comprehension of time compressed sign language narratives. Journal of Psycholinguistic Research, 10(1), 3–15. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/7241407

Kushalnagar, R. S., Cavender, A. C., & Pâris, J.-F. (2010). Multiple view perspectives: improving inclusiveness and video compression in mainstream classroom recordings. Proceedings of the 12th international ACM SIGACCESS conference on Computers and accessibility - ASSETS ’10 (pp. 123–130). New York, New York, USA: ACM Press. doi:10.1145/1878803.1878827

Kushalnagar, R. S., & Trager, B. P. (2011). Improving classroom visual accessibility with cooperative smartphone recordings. ACM SIGCAS Computers and Society, 41(2), 51–58. doi:10.1145/2095272.2095277

Lang, H. G. (2002). Higher education for deaf students: Research priorities in the new millennium. Journal of Deaf Studies and Deaf Education, 7(4), 267–280. doi:10.1093/deafed/7.4.267

Marschark, M., Lang, H. G., & Albertini, J. A. (2002). Educating Deaf Students: From Research to Practice. New York: Oxford University Press.

Marschark, M., Leigh, G., Sapere, P., Burnham, D., Convertino, C., Stinson, M., Knoors, H., et al. (2006). Benefits of sign language interpreting and text alternatives for deaf students’ classroom learning. Journal of Deaf Studies and Deaf Education, 11(4), 421–437. doi:10.1093/deafed/enl013

Marschark, M., Pelz, J. B., Convertino, C., Sapere, P., Arndt, M. E., & Seewagen, R. (2005). Classroom Interpreting and Visual Information Processing in Mainstream Education for Deaf Students: Live or Memorex(R)? American Educational Research Journal, 42(4), 727–761. doi:10.3102/00028312042004727

Marschark, M., Sapere, P., Convertino, C., & Pelz, J. (2008). Learning via direct and mediated instruction by deaf students. Journal of Deaf Studies and Deaf Education, 13(4), 546–561. doi:10.1093/deafed/enn014

Mayer, R. E., Heiser, J., & Lonn, S. (2001). Cognitive constraints on multimedia learning: When presenting more material results in less understanding. Journal of Educational Psychology, 93(1), 187–198. doi:10.1037/0022-0663.93.1.187

Mayer, R. E., & Moreno, R. (1998). A split-attention effect in multimedia learning: Evidence for dual processing systems in working memory. Journal of Educational Psychology, 90(2), 312–320. doi:10.1037/0022-0663.90.2.312

National Center for Higher Education Management Systems. (2008). Progress and Completion: Graduation Rates: Six-Year Graduation Rates of Bachelor’s Students: 2008. Retrieved December 3, 2010, from http://www.higheredinfo.org/dbrowser/?level=nation&mode=graph&state=0&submeasure=27

Stinson, M., & Antia, S. (1999). Considerations in educating deaf and hard-of-hearing students in inclusive settings. Journal of Deaf Studies and Deaf Education, 4(3), 163–175. doi:10.1093/deafed/4.3.163

ACKNOWLEDGEMENT

The authors thank the RIT Seed Grant and NTID Innovation Grant programs for funding the research.